Zynga Geo Proxy: Reducing Mobile Game Latency

Purpose

Zynga games have a wide global audience. Because our main servers are in Oregon, USA, latency (the time for a request from a user device to reach Zynga servers and for the response to come back) is very high, especially for our global users. Our aim was to reduce this latency for our end users.

Methodology

Our objective was to select a Zynga game that has a good percentage of global users and makes heavy use of HTTP or HTTPS to talk to Zynga servers, apply our solution, and measure the latency delta. Selecting a live game would let us gather existing global latency data and iterate quickly. It was important to avoid big changes to the codebase so as not to negatively impact the selected live game. We would then measure user experience improvements via A/B testing.

Background

A] HTTP/S handshake

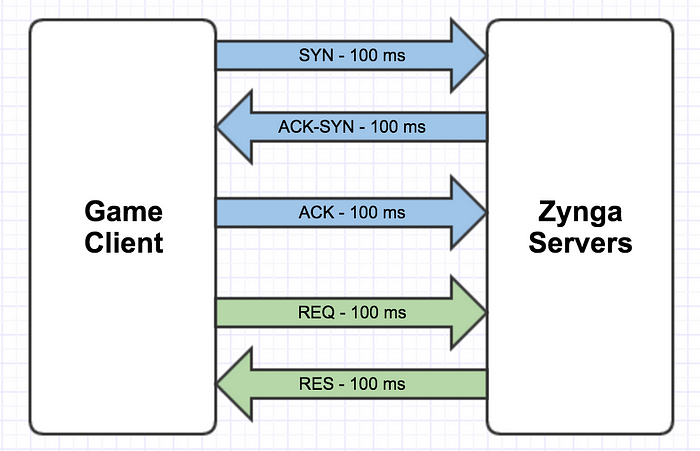

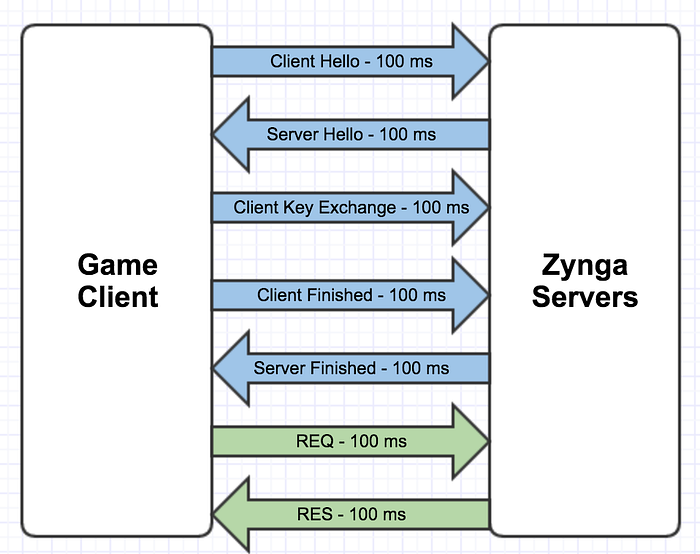

- The diagrams show the messages (blue arrows) that the client and server must exchange to initialize an HTTP/S connection.

- Only after the handshake completes can a client start sending data to the server and get a response.

- Assuming a round trip takes 100 ms, it will take more than 400 ms in both cases for a client to get any response back from the server.

- Note: This does not even consider the server processing time to respond to the request.

- This latency will be even higher for global users, far away geographically from Oregon, USA.
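As a rough worked example of the arithmetic above, the sketch below multiplies the round-trip time by the number of round trips needed before the first response arrives. The function name and the round-trip count of 3 are illustrative assumptions, not values read from the diagrams; the real count depends on the protocol and TLS version in use.

```python
def time_to_first_response_ms(rtt_ms, handshake_round_trips, server_ms=0.0):
    """Estimate the delay before the client receives its first response.

    Each handshake round trip costs one RTT, and the request/response
    exchange itself costs one more. Server processing time is added on top.
    """
    if rtt_ms < 0 or handshake_round_trips < 0 or server_ms < 0:
        raise ValueError("inputs must be non-negative")
    return (handshake_round_trips + 1) * rtt_ms + server_ms


# Assumed: 3 handshake round trips, no server processing time.
print(time_to_first_response_ms(100, 3))  # 400.0
# The same call from a device with a 300 ms round trip (hypothetical value).
print(time_to_first_response_ms(300, 3))  # 1200.0
```

Because the round-trip time is multiplied by every exchange, a distant user pays the distance penalty several times per call, not once.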

B] Latency

- Total latency for an HTTP/S call from a user device to a Zynga server is a combination of the following:

- Time to establish a connection

- Determined by network latency

- Time to send client data from device to our data center

- Determined by network throughput. May also be slightly affected by network latency.

- This time includes any retransmission due to dropped packets.

- Time to process the request

- Determined by our data center performance.

- Time to send response back to the client

- Determined by network throughput. May also be slightly affected by network latency.

- Historically, server teams at Zynga have looked only at ‘Time to process the request’.

- Since these teams own the servers, it was easy for them to focus only on this metric.

- This also indirectly represents overall health of the data center.

- However, end user experience is directly tied to the total latency, not just a part of it.

- Also, some games recently changed their calls from HTTP to HTTPS in order to be compatible with ‘Apple App Transport Security’. As soon as this was done, these games started seeing many user complaints that game features were slow. This was primarily due to increased latency.

- It became evident that we would first need to gather end-to-end latency data and then find a solution for games that wanted to stick with HTTP/S for data exchange.
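The four components listed above can be written down as a small data structure, which makes it easy to see how much of a call is spent outside the data center. This is a minimal sketch: the class name, field names, and the sample numbers are all hypothetical and are not measurements from any Zynga game.

```python
from dataclasses import dataclass


@dataclass
class LatencyBreakdown:
    """Per-call latency components, all in milliseconds."""
    connect_ms: float   # time to establish the connection
    upload_ms: float    # time to send client data to the data center
    process_ms: float   # time for the data center to process the request
    download_ms: float  # time to send the response back to the client

    @property
    def total_ms(self) -> float:
        return (self.connect_ms + self.upload_ms
                + self.process_ms + self.download_ms)

    @property
    def network_share(self) -> float:
        """Fraction of the total spent outside data center processing."""
        total = self.total_ms
        return 0.0 if total == 0 else (total - self.process_ms) / total


# Hypothetical numbers, for illustration only.
call = LatencyBreakdown(connect_ms=400.0, upload_ms=60.0,
                        process_ms=40.0, download_ms=100.0)
print(call.total_ms)                # 600.0
print(f"{call.network_share:.0%}")  # 93%
```

In a case like this, tracking only `process_ms` would hide most of what the end user actually waits for.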

C] Latency data gathering

- We added code to measure end-to-end latency in FarmVille 2: Country Escape.

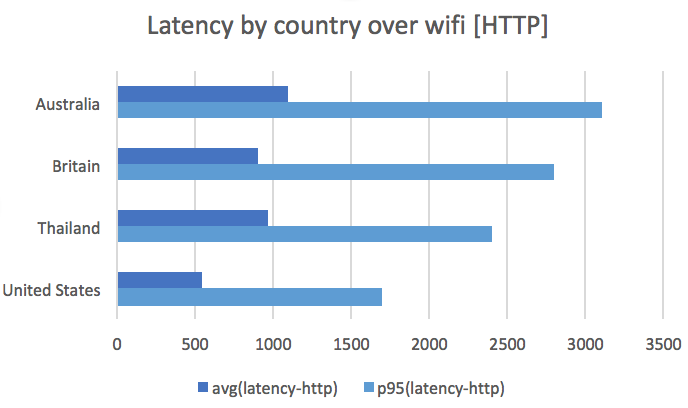

- The following graphs show total latency for some countries over Wi-Fi and HTTP/S:

- Observation:

- Looking at the graphs, it is evident (as expected) that HTTP latency is lower than HTTPS latency.

- We compared the ‘Time to process the request’ numbers, which we had been recording historically, against these numbers.

- Looking at the delta, we concluded that data center processing is not the major contributor to total latency.

- In fact, connection establishment and sending and receiving data account for a large part of the overall end-to-end latency.

- Also, latencies vary greatly from region to region.
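The post does not show the measurement code itself. One way such client-side timing could look is sketched below: wrap each call in a timer and group the samples by country and scheme so that medians can be compared. `LatencyRecorder`, its methods, and `fake_request` are hypothetical names introduced only for this illustration.

```python
import time
from collections import defaultdict
from statistics import median


class LatencyRecorder:
    """Collects end-to-end call timings, grouped by (country, scheme)."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock  # injectable so the recorder can be tested
        self._samples = defaultdict(list)

    def timed_call(self, country, scheme, send_request):
        """Run send_request() and record how long it took, in milliseconds."""
        start = self._clock()
        try:
            return send_request()
        finally:
            elapsed_ms = (self._clock() - start) * 1000.0
            self._samples[(country, scheme)].append(elapsed_ms)

    def median_ms(self, country, scheme):
        """Median latency for one group; raises if the group has no samples."""
        return median(self._samples[(country, scheme)])


def fake_request():
    """Stand-in for a real HTTP/S call (assumption: no network here)."""
    time.sleep(0.01)
    return "ok"


recorder = LatencyRecorder()
for _ in range(3):
    recorder.timed_call("IN", "https", fake_request)
print(round(recorder.median_ms("IN", "https")), "ms")
```

Measuring on the client captures connection setup and transfer time as well as server processing, which is what makes the comparison against server-side numbers possible.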

Implementation Options

- HTTP keep-alive

- Implementing this would mean code changes to our client HTTP library, a bigger change than we were comfortable with for this test.

- Another limitation of this approach is that the client needs to keep sending traffic to keep the connection alive. We would only see the benefit if the client sends requests more often than the HTTP keep-alive timeout.

- Although this solution would have worked, we parked it for the time being and started thinking about other possibilities.

- Data center replication in multiple geographic locations

- We would need to replicate our entire data center at various geographic locations.

- This solution would be very expensive.

- It could also introduce complications when clients move between locations.

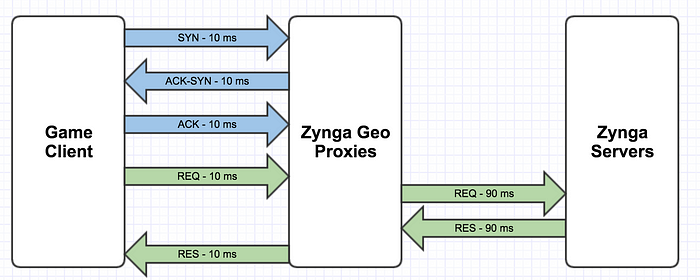

- Geo Proxy

- For this we would need to spin up proxy servers in various geographic regions.

- These proxy servers would have pre-established persistent connections to our Oregon servers.

- With this approach, the connection establishment handshake latency would decrease.
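Both the keep-alive option and the geo proxy option depend on reusing a connection that is already open, and reuse only works while the connection has not idled past its timeout. The sketch below counts how many requests in a traffic pattern would have to open a new connection. The function name, the request times, and the timeout values are assumptions chosen for illustration.

```python
def count_handshakes(request_times_s, idle_timeout_s):
    """Count how many requests must open a new connection.

    request_times_s: ascending timestamps (seconds) at which requests are sent.
    idle_timeout_s: how long an idle connection stays open before closing.
    """
    handshakes = 0
    last_request = None
    for t in request_times_s:
        if last_request is None or t - last_request > idle_timeout_s:
            handshakes += 1  # never connected, or the connection timed out
        last_request = t
    return handshakes


# Hypothetical traffic: a burst of requests, a long pause, then two more.
times = [0, 5, 10, 70, 75]
print(count_handshakes(times, idle_timeout_s=30))  # 2
print(count_handshakes(times, idle_timeout_s=3))   # 5
```

With a timeout shorter than the gap between requests, every request pays for a fresh handshake and the reuse benefit disappears.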

Implementation Selection

Of the three implementation options, the ‘Geo Proxy’ option fit best with our methodology. After settling on this option, our next step was to look at existing third-party solutions. We looked at two options:

- CloudFront from Amazon Web Services

- Since Zynga uses ‘Amazon Web Services’ products heavily, this seemed to be a good fit.

- However, CloudFront has hard and fast rules requiring HTTP calls to follow RESTful standards. For example, GET requests should not contain a payload.

- We found this restrictive, as not all of our microservices follow all the RESTful standards.

- Cloudflare

- The access pattern of the first game we wanted to try our tech on did not work well with their default server timeouts.

- Our tests revealed that some calls were not using their pre-established connections; because of the server timeouts, they ended up establishing new connections instead.

- This would not have given us the latency gains we expected.

- This option would also be costlier than running our own custom solution.

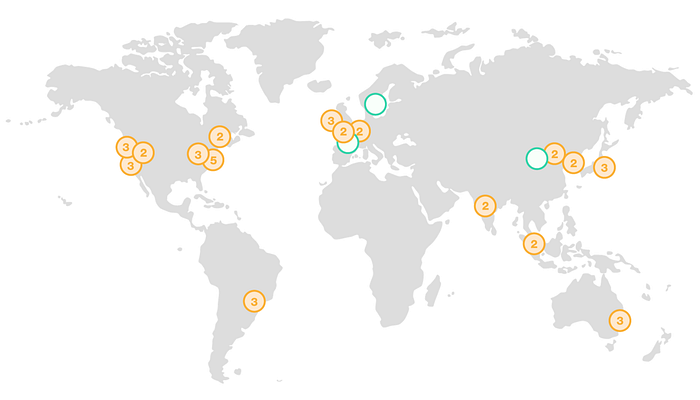

After ruling out existing third-party solutions, we decided to build our own hybrid custom implementation. We decided to spin up a ‘lite’ version of our Zynga servers on the ‘Amazon Web Services’ global infrastructure. Then, using ‘Amazon Web Services – Route 53’, global users would hit the nearest Zynga proxy servers rather than our main Zynga servers in Oregon. This would give us the flexibility and control we wanted.
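The post does not say which Route 53 routing policy was used, and the selection happens at the DNS level rather than in application code. Purely to illustrate the idea of sending each user to the closest endpoint, the sketch below picks the endpoint with the lowest measured latency. It does not use any AWS API; the function name, endpoint labels, and latency values are hypothetical.

```python
def pick_lowest_latency_endpoint(latency_ms_by_endpoint):
    """Return the endpoint name with the smallest measured latency."""
    if not latency_ms_by_endpoint:
        raise ValueError("no endpoints to choose from")
    return min(latency_ms_by_endpoint, key=latency_ms_by_endpoint.get)


# Hypothetical measurements from a device in Europe.
measured = {"oregon": 280.0, "frankfurt": 35.0, "singapore": 190.0}
print(pick_lowest_latency_endpoint(measured))  # frankfurt
```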

Solution

Geo Proxy

- We have deployed a simplified version of our Zynga servers at various geographic locations:

- Virginia [North America]

- Frankfurt [Europe]

- Sao Paulo [South America]

- Sydney [Australia]

- Singapore [Asia Pacific]

- The game client communicates with the geo proxy and the geo proxy forwards traffic to our servers in Oregon.

- The connection handshake, as well as sending and receiving data, now happens over lower-latency connections to the nearby geo proxy.

- Because the proxies are closer to clients, each round trip between the client device and the server it talks to becomes much shorter.

- The proxies communicate with Zynga Servers using pre-established, persistent connections, eliminating the overhead of establishing new connections.

- This significantly reduces the total latency per call.

- Currently this solution is limited to regions where AWS data centers are present.
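A simple model makes the effect of the geo proxy concrete. With a direct connection, every handshake round trip crosses the full distance to Oregon; with a proxy, the handshake round trips cross only the short client-to-proxy path, and the proxy forwards the request over a connection that is already open. The sketch assumes 3 handshake round trips and made-up round-trip times, and it ignores throughput and proxy processing overhead.

```python
def direct_latency_ms(rtt_client_origin, handshake_round_trips, server_ms):
    """Client handshakes and exchanges data with the origin directly."""
    return (handshake_round_trips + 1) * rtt_client_origin + server_ms


def proxied_latency_ms(rtt_client_proxy, rtt_proxy_origin,
                       handshake_round_trips, server_ms):
    """Client handshakes with a nearby proxy; the proxy reuses an open
    connection to the origin, so only one proxy-to-origin round trip is paid."""
    client_leg = (handshake_round_trips + 1) * rtt_client_proxy
    origin_leg = rtt_proxy_origin
    return client_leg + origin_leg + server_ms


# Hypothetical round-trip times for a device in Europe.
print(direct_latency_ms(160, 3, 40))        # 680
print(proxied_latency_ms(20, 150, 3, 40))   # 270
```

In this model the saving comes from paying the long-haul round trip once instead of once per exchange, so the gain shrinks as the client gets closer to the origin.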

Results

A] Latency results

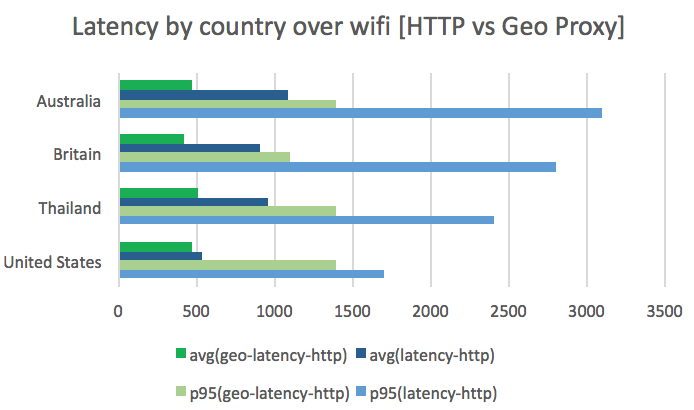

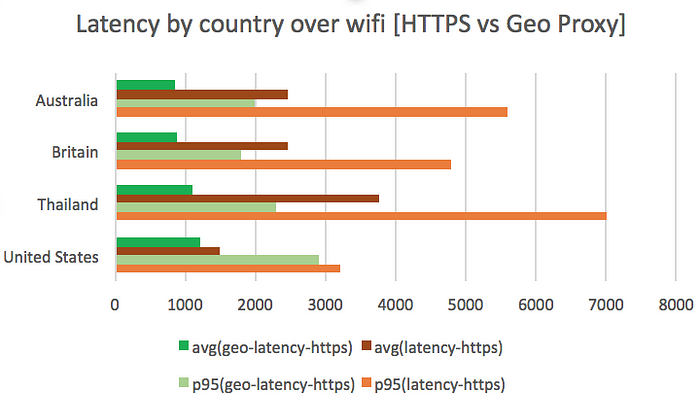

- The following graphs show total latency for countries with and without geo proxy over Wi-Fi and HTTP/S:

- Conclusion:

- For regions outside North America, we can clearly see a reduction in total latency when user devices connect to local geo proxies.

- Geo proxies are less effective within the US because our main servers are in the Oregon data center. However, there is still an upside to using geo proxies there.

B] Game results

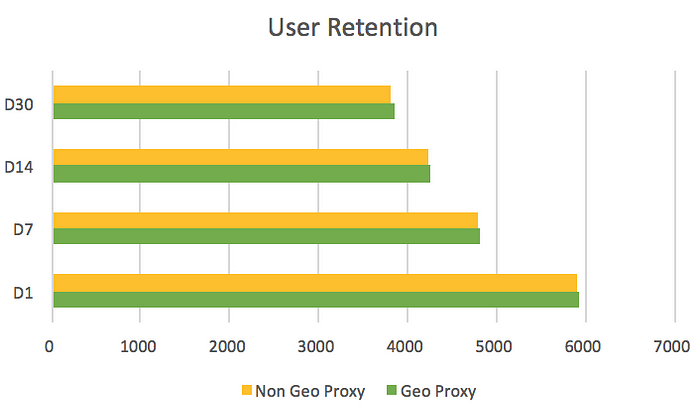

- User retention

- We A/B tested user retention by bucketing users into geo proxy and non-geo-proxy groups.

- We saw a consistent increase of 30 basis points (0.30 percentage points) in user retention across D1 through D30 for users on the geo proxy solution.

- Social feature responsiveness:

- FarmVille 2: Country Escape has social features such as trading and alliances.

- Without the geo proxy, global user devices on both HTTP and HTTPS had high latency when fetching data from the server for these features, especially when using HTTPS.

- Thus, these game features appeared slow to our global users.

- As a direct result, customer support tickets increased.

- After we enabled the geo proxy solution, user complaints about “social features being slow” dropped significantly.

- User sessions:

- We have seen a minor positive impact (1%) on sessions per user for users on the geo proxy solution.
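The post does not describe how users were assigned to the two groups. A common approach, shown here only as an assumed illustration, is to hash a stable user identifier so that each user always lands in the same bucket. The function name, experiment label, and 50/50 split are hypothetical.

```python
import hashlib


def assign_bucket(user_id, experiment="geo_proxy", treatment_share=0.5):
    """Deterministically map a user to 'geo_proxy' or 'control'."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Interpret the first 8 bytes as an integer and scale it into [0, 1).
    fraction = int.from_bytes(digest[:8], "big") / 2**64
    return "geo_proxy" if fraction < treatment_share else "control"


# The same user always gets the same bucket across sessions.
print(assign_bucket("user-123") == assign_bucket("user-123"))  # True
```

Stable assignment matters for retention metrics such as D1 through D30, because a user who switched groups between sessions would blur the comparison.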

Conclusion

- Mobile games that primarily use HTTP/HTTPS and run on AWS or any other global cloud provider should consider a geo proxy solution to reduce latency for their end users.

- The improvements in user retention and user sessions indicate that this solution has a tangible positive impact on end users.

Appendix

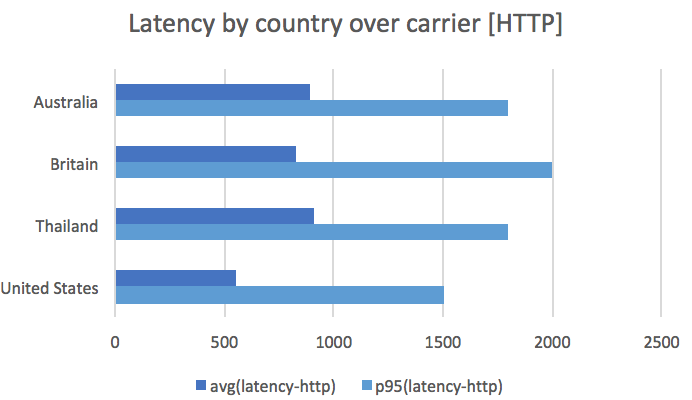

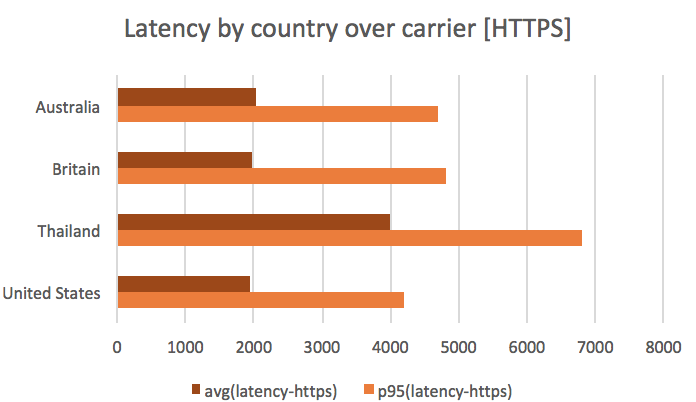

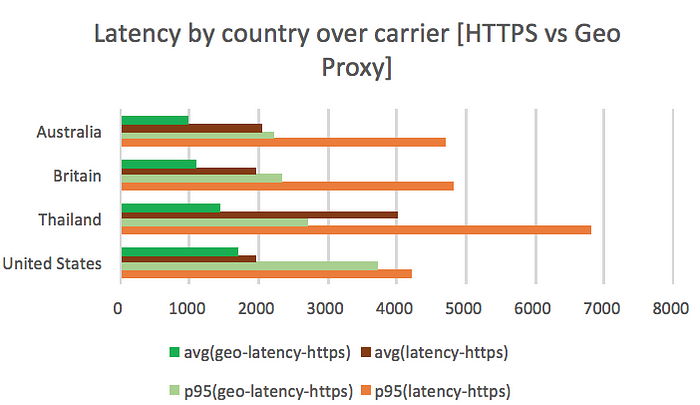

- We also wanted to measure latency when a user device is not connected to Wi-Fi. The following graphs show latency over carrier networks and HTTP/S, followed by graphs comparing these with geo proxy numbers over carrier networks.

- The following graphs show total latency for countries with and without geo proxy over carrier networks and HTTP/S:
